Connections between AI, Science and Art

Two books by Eric Kandel, Nobel Prize laureate in Physiology or Medicine, motivated this post. Kandel builds bridges between Art and Science, in particular between Modern Art and Brain Science. His book "The Age of Insight: The Quest to Understand the Unconscious in Art, Mind, and Brain, from Vienna 1900 to the Present" inspired us to think about Explainable Artificial Intelligence through Science and Art. His other book, "Reductionism in Art and Brain Science: Bridging the Two Cultures", clearly explains how Brain Science and Abstract Art are connected.

Both Artificial Intelligence and Neuroscience are fields of Cognitive Science, the interdisciplinary study of cognition in humans, animals, and machines. Cognitive Science should help us open the "Artificial Intelligence Black Box" and move from unexplainable AI to XAI (Explainable AI).

In this post we will attempt to connect AI with Science and with Art. We will follow the approach Kandel describes in his book 'Reductionism in Art and Brain Science', where he shows how science can apply reductionism to the most complex puzzles in order to explore their complexity.

CNN Deep Learning Lower Layers and 'Simple Cells'

The breakthrough moment for Convolutional Neural Networks (CNNs) came in 2012. Soon after, the article "The best explanation of Convolutional Neural Networks on the Internet!" showed in pictures that the first few layers of a CNN respond to very simple features: colored patches and edges.

In the middle of the 20th century, neuroscientists discovered that human visual perception works in a very similar way: some neurons specialize in very simple images, such as lines and edges at a particular orientation. These "simple cells" were discovered by Torsten Wiesel and David Hubel in 1958. Based on this discovery of simple cells, Yann LeCun developed the Convolutional Neural Network in 1989.
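The "simple cell" idea maps directly onto a first-layer convolution filter. Below is a minimal plain-Scala sketch. It assumes a hand-written 3x3 Sobel-style vertical-edge kernel and a tiny synthetic image. Real CNNs learn their kernels from data. `SimpleCellDemo`, `convolve` and `verticalEdge` are illustrative names. Like a simple cell, the filter responds only where an edge with its preferred orientation falls inside its small receptive field.

```scala
// Illustrative sketch: a hand-made "simple cell" as a 3x3 vertical-edge filter.
// The Sobel-style kernel is an assumption for illustration; CNNs learn theirs.
object SimpleCellDemo {
  type Image = Vector[Vector[Double]]

  // Responds to dark-to-bright transitions from left to right
  val verticalEdge: Image = Vector(
    Vector(-1.0, 0.0, 1.0),
    Vector(-2.0, 0.0, 2.0),
    Vector(-1.0, 0.0, 1.0)
  )

  // "Valid" 2D cross-correlation (what CNN layers call convolution):
  // no padding, stride 1, one output value per kernel position.
  def convolve(img: Image, k: Image): Image = {
    val kh = k.length
    val kw = k.head.length
    Vector.tabulate(img.length - kh + 1, img.head.length - kw + 1) { (i, j) =>
      (for (di <- 0 until kh; dj <- 0 until kw)
        yield img(i + di)(j + dj) * k(di)(dj)).sum
    }
  }

  def main(args: Array[String]): Unit = {
    // 4x5 image: dark (0) in columns 0-1, bright (1) in columns 2-4
    val img: Image = Vector.fill(4)(Vector(0.0, 0.0, 1.0, 1.0, 1.0))
    val response = convolve(img, verticalEdge)
    response.foreach(row => println(row.mkString(" "))) // prints "4.0 4.0 0.0" twice
  }
}
```

The response is 4.0 in the two windows that straddle the dark-to-bright boundary. It is 0.0 in the window that sees only the uniform bright region.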

Perception involves two general processes: bottom-up and top-down. Simple pieces extracted from what we see (bottom-up processing) are combined with existing knowledge (top-down processing). This combination resembles a popular approach in deep learning, transfer learning, where pre-trained models are used as the starting point for training.

The article "A Brief History of Computer Vision (and Convolutional Neural Networks)" describes in more detail how neuroscience influenced CNNs.

Simple Images in Abstract Art

Art perception also relies on two strategies: bottom-up and top-down processing. Art grasped these processes much earlier than science did: in 1907 Pablo Picasso and Georges Braque started the Cubism movement.

Picasso and Braque invented a new style of painting composed of simple, bottom-up images. Cubism is often called the most influential art movement of the 20th century, and it paved the way for other movements such as Abstract Art.

Why did artists recognize the simple images of bottom-up processing so much earlier than scientists were able to find and prove them? Is it because complexity can be understood intuitively long before it can be explained logically?

Art to Science to AI

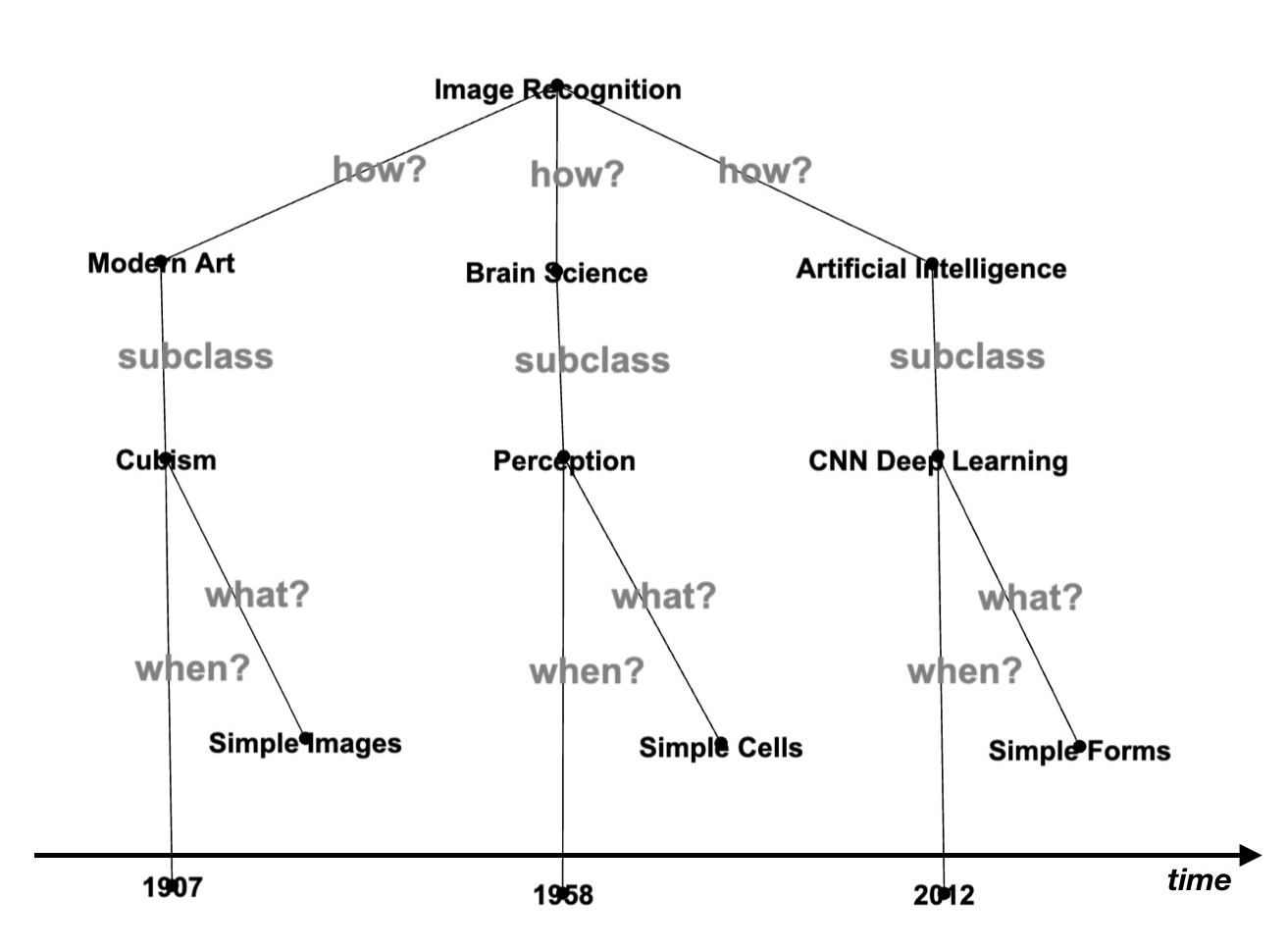

To build a bridge between Art and Artificial Intelligence, we will 'connect the dots' of Mind Mapping using RDF triples (subject, predicate, object).

```scala
// Assumes a Databricks notebook with the GraphFrames library attached:
// `sc`, `spark` and `display` are provided by the notebook.
import org.apache.spark.sql.DataFrame
import org.graphframes.GraphFrame
import spark.implicits._ // enables .toDF and the $"column" syntax (auto-imported in Databricks)

// Mind Mapping as (subject, predicate, object) triples
val mindMapping = sc.parallelize(Array(
  ("Image Recognition", "how?", "Artificial Intelligence"),
  ("Artificial Intelligence", "subclass", "CNN Deep Learning"),
  ("CNN Deep Learning", "what?", "Simple Forms"),
  ("CNN Deep Learning", "when?", "2012"),
  ("Image Recognition", "how?", "Brain Science"),
  ("Brain Science", "subclass", "Perception"),
  ("Perception", "what?", "Simple Cells"),
  ("Perception", "when?", "1958"),
  ("Image Recognition", "how?", "Modern Art"),
  ("Modern Art", "subclass", "Cubism"),
  ("Cubism", "what?", "Simple Images"),
  ("Cubism", "when?", "1907")
)).toDF("subject", "predicate", "object")

display(mindMapping) // outside Databricks: mindMapping.show(false)
```

| subject | predicate | object |
|---|---|---|
| Image Recognition | how? | Artificial Intelligence |
| Artificial Intelligence | subclass | CNN Deep Learning |
| CNN Deep Learning | what? | Simple Forms |
| CNN Deep Learning | when? | 2012 |
| Image Recognition | how? | Brain Science |
| Brain Science | subclass | Perception |
| Perception | what? | Simple Cells |
| Perception | when? | 1958 |
| Image Recognition | how? | Modern Art |
| Modern Art | subclass | Cubism |
| Cubism | what? | Simple Images |
| Cubism | when? | 1907 |

From the Mind Mapping RDF triples we will build a knowledge graph, using the same technique as for the Word2Vec2Graph model: Spark GraphFrames.
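Conceptually, the graph's nodes are the distinct subjects and objects. Its edges are the triples themselves, with the predicate as the edge label. The following is a Spark-free sketch of that construction in plain Scala. The `KnowledgeGraph` object and `Triple` case class are illustrative, not GraphFrames types. It makes the node and edge counts easy to check.

```scala
// Spark-free sketch: KnowledgeGraph and Triple are illustrative names, not GraphFrames API
object KnowledgeGraph {
  final case class Triple(subject: String, predicate: String, obj: String)

  val mindMapping: Seq[Triple] = Seq(
    Triple("Image Recognition", "how?", "Artificial Intelligence"),
    Triple("Artificial Intelligence", "subclass", "CNN Deep Learning"),
    Triple("CNN Deep Learning", "what?", "Simple Forms"),
    Triple("CNN Deep Learning", "when?", "2012"),
    Triple("Image Recognition", "how?", "Brain Science"),
    Triple("Brain Science", "subclass", "Perception"),
    Triple("Perception", "what?", "Simple Cells"),
    Triple("Perception", "when?", "1958"),
    Triple("Image Recognition", "how?", "Modern Art"),
    Triple("Modern Art", "subclass", "Cubism"),
    Triple("Cubism", "what?", "Simple Images"),
    Triple("Cubism", "when?", "1907")
  )

  // Nodes: distinct union of subjects and objects (mirrors graphNodes)
  val nodes: Seq[String] =
    (mindMapping.map(_.subject) ++ mindMapping.map(_.obj)).distinct

  // Edges: (src, dst, edgeId), with the predicate as the edge label (mirrors graphEdges)
  val edges: Seq[(String, String, String)] =
    mindMapping.map(t => (t.subject, t.obj, t.predicate)).distinct

  def main(args: Array[String]): Unit =
    println(s"${nodes.size} nodes, ${edges.size} edges") // prints "13 nodes, 12 edges"
}
```

With these 12 triples the sketch yields 13 nodes and 12 edges. The GraphFrames code builds the same two sets as DataFrames.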

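```scala
// Nodes: every distinct subject or object
val graphNodes = mindMapping.select("subject").
  union(mindMapping.select("object")).distinct.toDF("id")

// Edges: subject -> object, with the predicate stored as the edge attribute "edgeId"
val graphEdges = mindMapping.select("subject", "object", "predicate").
  distinct.toDF("src", "dst", "edgeId")

val graph = GraphFrame(graphNodes, graphEdges)
```

Query the Knowledge Graph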

As the graph query language, we will use the GraphFrames 'find' function, which searches the graph for structural patterns (motifs).

Get the triples with the 'how?' predicate:

```scala
val line = graph.
  find("(a) - [ab] -> (b)").
  filter($"ab.edgeId" === "how?").
  select("a.id", "ab.edgeId", "b.id").
  toDF("node1", "edge12", "node2")

display(line)
```

| node1 | edge12 | node2 |
|---|---|---|
| Image Recognition | how? | Modern Art |
| Image Recognition | how? | Artificial Intelligence |
| Image Recognition | how? | Brain Science |

Show the same triples in 'motif' notation:

```scala
val line = graph.
  find("(a) - [ab] -> (b)").
  filter($"ab.edgeId" === "how?").
  select("a.id", "ab.edgeId", "b.id").
  map(row => s"(${row(0)}) - [${row(1)}] -> (${row(2)});") // Dataset[String], column "value"

display(line)
```

| value |
|---|
| (Image Recognition) - [how?] -> (Modern Art); |
| (Image Recognition) - [how?] -> (Artificial Intelligence); |
| (Image Recognition) - [how?] -> (Brain Science); |

Connect two triples where the second predicate equals 'when?':

```scala
val line = graph.
  find("(a) - [ab] -> (b); (b) - [bc] -> (c)").
  filter($"bc.edgeId" === "when?").
  select("a.id", "ab.edgeId", "b.id", "bc.edgeId", "c.id").
  toDF("node1", "edge12", "node2", "edge23", "node3")

display(line.orderBy($"node3")) // $"..." instead of the deprecated 'node3 symbol literal
```

| node1 | edge12 | node2 | edge23 | node3 |
|---|---|---|---|---|
| Modern Art | subclass | Cubism | when? | 1907 |
| Brain Science | subclass | Perception | when? | 1958 |
| Artificial Intelligence | subclass | CNN Deep Learning | when? | 2012 |

The same result in 'motif' notation:

```scala
val line = graph.
  find("(a) - [ab] -> (b); (b) - [bc] -> (c)").
  filter($"bc.edgeId" === "when?").
  select("a.id", "ab.edgeId", "b.id", "bc.edgeId", "c.id").
  map(row => s"(${row(0)}) - [${row(1)}] -> (${row(2)}) - [${row(3)}] -> (${row(4)})")

display(line)
```

| value |
|---|
| (Artificial Intelligence) - [subclass] -> (CNN Deep Learning) - [when?] -> (2012) |
| (Brain Science) - [subclass] -> (Perception) - [when?] -> (1958) |
| (Modern Art) - [subclass] -> (Cubism) - [when?] -> (1907) |

Create phrases from two connected triples whose last object contains 'Simple':

```scala
val line = graph.
  find("(a) - [ab] -> (b); (b) - [bc] -> (c)").
  filter($"c.id".rlike("Simple")).
  select("a.id", "b.id", "c.id").
  map(row => s"In ${row(0)} area ${row(1)} discovered ${row.getString(2).toLowerCase}")

display(line)
```

| value |
|---|
| In Modern Art area Cubism discovered simple images |
| In Brain Science area Perception discovered simple cells |
| In Artificial Intelligence area CNN Deep Learning discovered simple forms |
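A two-edge motif such as '(a) - [ab] -> (b); (b) - [bc] -> (c)' can be read as a self-join of the edge list. It keeps every pair of edges where the first edge's destination is the second edge's source, and then applies the filter. The plain-Scala sketch below reproduces the 'when?' paths and the 'Simple' phrases from the two-edge queries above. `TwoHopMotif` and `Edge` are illustrative names. This is a collection-based illustration of the motif semantics, not the GraphFrames implementation.

```scala
// Spark-free sketch of the motif "(a) - [ab] -> (b); (b) - [bc] -> (c)".
// TwoHopMotif and Edge are illustrative names, not GraphFrames API.
object TwoHopMotif {
  final case class Edge(src: String, dst: String, edgeId: String)

  // The same 12 edges as graphEdges: (subject, object, predicate)
  val edges: Seq[Edge] = Seq(
    Edge("Image Recognition", "Artificial Intelligence", "how?"),
    Edge("Artificial Intelligence", "CNN Deep Learning", "subclass"),
    Edge("CNN Deep Learning", "Simple Forms", "what?"),
    Edge("CNN Deep Learning", "2012", "when?"),
    Edge("Image Recognition", "Brain Science", "how?"),
    Edge("Brain Science", "Perception", "subclass"),
    Edge("Perception", "Simple Cells", "what?"),
    Edge("Perception", "1958", "when?"),
    Edge("Image Recognition", "Modern Art", "how?"),
    Edge("Modern Art", "Cubism", "subclass"),
    Edge("Cubism", "Simple Images", "what?"),
    Edge("Cubism", "1907", "when?")
  )

  // Self-join: every (ab, bc) pair where ab ends at the node where bc starts
  def twoHop(es: Seq[Edge]): Seq[(Edge, Edge)] =
    for { ab <- es; bc <- es if ab.dst == bc.src } yield (ab, bc)

  // Counterpart of filter($"bc.edgeId" === "when?") plus the motif-string map
  def whenPaths: Seq[String] =
    twoHop(edges).collect { case (ab, bc) if bc.edgeId == "when?" =>
      s"(${ab.src}) - [${ab.edgeId}] -> (${ab.dst}) - [${bc.edgeId}] -> (${bc.dst})"
    }

  // Counterpart of filter($"c.id".rlike("Simple")) plus the phrase map
  def simplePhrases: Seq[String] =
    twoHop(edges).collect { case (ab, bc) if bc.dst.contains("Simple") =>
      s"In ${ab.src} area ${ab.dst} discovered ${bc.dst.toLowerCase}"
    }

  def main(args: Array[String]): Unit =
    (whenPaths ++ simplePhrases).foreach(println)
}
```

Here the rows come out in edge-list order. The Spark results may list them in a different order, because DataFrame row order is not guaranteed without an `orderBy`.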

Create phrases that combine all the triples:

```scala
// Branching motif: node c must have both a 'when?' edge (to d) and a 'what?' edge (to e).
// In this data, a -> b is a 'how?' edge and b -> c is a 'subclass' edge.
val line = graph.
  find("(a) - [ab] -> (b); (b) - [bc] -> (c); (c) - [cd] -> (d); (c) - [ce] -> (e)").
  filter($"cd.edgeId" === "when?").
  filter($"ce.edgeId" === "what?").
  select("a.id", "b.id", "c.id", "d.id", "e.id").
  map(row => s"For ${row.getString(0).toLowerCase} ${row.getString(4).toLowerCase}" +
    s" were discovered by ${row(1)} (${row(2)}) in ${row(3)}")

display(line)
```

| value |
|---|
| For image recognition simple images were discovered by Modern Art (Cubism) in 1907 |
| For image recognition simple cells were discovered by Brain Science (Perception) in 1958 |
| For image recognition simple forms were discovered by Artificial Intelligence (CNN Deep Learning) in 2012 |
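The last query is a branching motif: node c needs both a 'when?' edge and a 'what?' edge. In collection terms, that means two extra joins on the same node c. The sketch below shows this in plain Scala. `DiscoveryPhrases` and `Edge` are illustrative names, and this is not the GraphFrames API.

```scala
// Spark-free sketch of the branching motif
// "(a) - [ab] -> (b); (b) - [bc] -> (c); (c) - [cd] -> (d); (c) - [ce] -> (e)".
// DiscoveryPhrases and Edge are illustrative names, not GraphFrames API.
object DiscoveryPhrases {
  final case class Edge(src: String, dst: String, edgeId: String)

  // The same 12 edges as graphEdges: (subject, object, predicate)
  val edges: Seq[Edge] = Seq(
    Edge("Image Recognition", "Artificial Intelligence", "how?"),
    Edge("Artificial Intelligence", "CNN Deep Learning", "subclass"),
    Edge("CNN Deep Learning", "Simple Forms", "what?"),
    Edge("CNN Deep Learning", "2012", "when?"),
    Edge("Image Recognition", "Brain Science", "how?"),
    Edge("Brain Science", "Perception", "subclass"),
    Edge("Perception", "Simple Cells", "what?"),
    Edge("Perception", "1958", "when?"),
    Edge("Image Recognition", "Modern Art", "how?"),
    Edge("Modern Art", "Cubism", "subclass"),
    Edge("Cubism", "Simple Images", "what?"),
    Edge("Cubism", "1907", "when?")
  )

  // Chain a -> b -> c, then branch from c to a 'when?' target d and a 'what?' target e
  def phrases(es: Seq[Edge]): Seq[String] =
    for {
      ab <- es
      bc <- es if bc.src == ab.dst
      cd <- es if cd.src == bc.dst && cd.edgeId == "when?"
      ce <- es if ce.src == bc.dst && ce.edgeId == "what?"
    } yield s"For ${ab.src.toLowerCase} ${ce.dst.toLowerCase} were discovered by " +
      s"${ab.dst} (${bc.dst}) in ${cd.dst}"

  def main(args: Array[String]): Unit =
    phrases(edges).foreach(println)
}
```

Each phrase needs all four edges to match. Only the three subclass nodes (CNN Deep Learning, Perception, Cubism) carry both a 'when?' and a 'what?' edge, so exactly three phrases come out.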

Graph Image

Convert the graph edges to the DOT language, wrap the resulting lines in 'digraph { }', and visualize the graph with the Gephi tool.

```scala
// One DOT statement per edge: "src" -> "dst" [label="edgeId"];
def graph2dot(graph: GraphFrame): DataFrame = {
  graph.edges.distinct.
    map(s => "\"" + s(0).toString + "\" -> \"" + s(1).toString + "\"" +
      " [label=\"" + s(2).toString + "\"];").
    toDF("dotLine")
}

display(graph2dot(graph))
```

Wrapped in 'digraph { }', the output lines give:

```dot
digraph {
"Perception" -> "Simple Cells" [label="what?"];
"CNN Deep Learning" -> "2012" [label="when?"];
"Cubism" -> "Simple Images" [label="what?"];
"Cubism" -> "1907" [label="when?"];
"Artificial Intelligence" -> "CNN Deep Learning" [label="subclass"];
"Brain Science" -> "Perception" [label="subclass"];
"Modern Art" -> "Cubism" [label="subclass"];
"Image Recognition" -> "Artificial Intelligence" [label="how?"];
"Image Recognition" -> "Brain Science" [label="how?"];
"Perception" -> "1958" [label="when?"];
"CNN Deep Learning" -> "Simple Forms" [label="what?"];
"Image Recognition" -> "Modern Art" [label="how?"];
}
```
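The Gephi step still requires joining the dotLine rows and wrapping them in 'digraph { }' by hand. A small plain-Scala sketch can do both. `DotExport` and `toDot` are hypothetical helpers. The sketch also escapes double quotes, which the DOT language requires inside quoted IDs, so a label that contains a quote cannot break the file.

```scala
// Hypothetical helper: builds a complete DOT digraph from (src, dst, label) edges.
object DotExport {
  // Wrap an ID in double quotes, escaping any embedded double quote as \"
  private def quote(s: String): String =
    "\"" + s.replace("\"", "\\\"") + "\""

  // One edge statement per distinct edge, joined and wrapped in "digraph { ... }"
  def toDot(edges: Seq[(String, String, String)]): String =
    edges.distinct
      .map { case (src, dst, label) => s"  ${quote(src)} -> ${quote(dst)} [label=${quote(label)}];" }
      .mkString("digraph {\n", "\n", "\n}")

  def main(args: Array[String]): Unit =
    println(toDot(Seq(
      ("Modern Art", "Cubism", "subclass"),
      ("Cubism", "1907", "when?"),
      ("Cubism", "Simple Images", "what?")
    )))
}
```

You can write the returned string to a file with a .dot extension. That file should then import into Gephi or render with Graphviz.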

More Questions...

- CNN Deep Learning was not created by magic: it was built on the Brain Science discovery of simple cells. Why do we worry about unexplainable AI but not about how our own brains work?

- More than 50 years before Brain Science discovered simple cells, Cubist paintings showed us how our perception works. Why were some very complicated problems demonstrated by artists so much earlier than they were proved by scientists? Is it because intuition works much faster than logical thinking?

- Can some AI ideas come from intuition before science has proved them?

Next Post - Associations and Deep Learning

In the next post we will take a deeper look at deep learning for data associations.